Artists in the performing and theatrical arts now have access to technologies of a variety and complexity unprecedented in comparison with classical methods. Without necessarily dismissing tradition, they can work with devices that originally had no artistic application, such as virtual humans and robots. Before attempting to understand how artists appropriate these devices and how this changes the creative process, it is important to describe their different types and basic principles. These artificial beings are not merely materials and tools slightly more complicated than traditional ones; they are of a totally different nature, and they carry within them the seeds of a new aesthetic. Those who use them must work freely with this aesthetic, while appreciating its different nature.

From the Automaton to the Technological Being

Let’s start by examining the technical and functional principles of these technologies. Although diverse in their structures and functions, these devices share the use of computer models that tend to simulate life and intelligence, though at differing levels of perfection. Obviously, computer technology has profoundly revolutionized technology in general. It has introduced the abstract language of programming into processes that were essentially material or energy-based (mechanical, electrical, electronic). All computers are a hybrid of various circuits, processors, memory, sensors, actuators and algorithms expressed in a symbolic code that can be executed by a machine. In the early years of what was called “computer science,” the models came from mathematics, logic, physics, optics, and computer technology itself. But with the appearance of the cognitive sciences – a vast interdisciplinary group bringing together information technology, psychology, neuroscience, the theory of evolution, robotics, linguistics and different sectors of philosophy and social sciences – computers have become progressively able to simulate certain abilities that characterize living, intelligent creatures and their evolution.

Until the appearance of computers, the advancement of machines was measured by their degree of autonomy: the more tasks a machine could perform without human intervention, the more technically superior it was considered. Though sometimes highly complex, the tasks these machines performed were entirely repetitive. Gilbert Simondon noted this clearly: “Automatism is a fairly low level of technical perfection.” (Simondon, 1969, p. 11) For the philosopher, a machine’s level of advancement corresponds not to an increase in automatism but, on the contrary, to the fact that its functioning contains a certain “margin of indeterminacy.” (Simondon, 1969, p. 11) Because they possess this margin in abundance, computers are for him not “pure automatons” but “technical beings” endowed with a certain openness and able to perform very different tasks. As examples, he cites machines that can extract square roots or translate a simple text.

Autonomy

Although the first computers were defined as machines capable of automatically processing information, we did not limit ourselves to asking them to automate certain tasks, some of them highly complex. We also entrusted them with the ambitious mission of simulating human thought processes themselves: our ability to reason, our intelligence. This became a special field within computer science, Artificial Intelligence. The radical hypothesis at the base of this research, originally formulated by Alan Turing,1 rests on the idea that thought can be reduced to calculation and the manipulation of symbols. “Thinking is calculating,” Turing asserted. In this first conception of artificial intelligence,2 the results were only partially satisfactory, and many problems remained unresolved.

Then, instead of simulating intelligence with logical-mathematical processes, we began to try to discover how the human brain acquires intelligence, and how the body contributes to the emergence and development of a different way of thinking. Virtual devices simulated by computer were equipped with means of perception, action, and coordination between actions and perceptions, so that they could react and adapt to unforeseeable changes in their environment. Thanks to these algorithms, computers became capable of programming themselves, thus acquiring a certain autonomy: in the etymological sense of the term,3 they were able to establish their own rules and enjoy a relative freedom within a limited and very specific frame of action. These algorithms, combined with progress in the machines themselves, were and are largely indebted to the cognitive sciences.4 They allow machines to perform operations that were simply not possible with classic deterministic programs. A recent example is the algorithm developed by Google that learns to play video games such as Space Invaders without its programmers having given it the rules of these games beforehand. It ends up playing as well as a human would under the same conditions. However, it can be nothing more than a good player; its abilities extend no further.

The autonomy of a system can only be relative. Even humans are only partially autonomous, to the extent that we are subject to different pressures: physical environment, genetic inheritance, social constraints, etc. Moreover, many of our functions, particularly our vital functions, are (fortunately) regulated by powerful involuntary and unconscious automatisms, as are many of our mental processes and behaviors. Man is a highly evolved natural composite of automatism and autonomy. It is thus possible to program anthropomorphic beings, whether virtual or assembled from physical materials, endowing them with cognitive abilities that approach those of humans, such as multimodal perception of the world, learning through trial and error, the recognition of artificial or natural shapes (such as faces), the development of an associative memory, decision-making followed by action, intentionality, inventiveness, the creation of new information, the simulation of emotions and empathy, and social behavior.

Avatars

Artificial humans fall into two broad categories: virtual humans and robots. While they share many basic technical principles, these devices have distinct modes of existence and different degrees of autonomy. Virtual humans, also known as “avatars,” do not possess any material form. They are projections of images. Their mode of existence consists of representing a human, in a virtual form, within a virtual space, such as cyberspace or virtual and augmented reality systems. However, in cyberspaces like Second Life (first and second versions) or online video games, avatars are not always faithful representations of the player, but imagined representations that allow the player to appear in disguise, under another identity, even as another sex, and play a role incognito. The avatar can also take the form of an animal or a purely imaginary being.

The simplest of these beings – which possesses zero autonomy, but is not an automaton – reproduces its controller’s movements and gestures in real time. The avatar is the user’s double, simultaneously augmented and reduced: augmented in the sense that it can achieve impossible actions in the virtual world (flying, walking on water, becoming invisible), and reduced in the sense that it is only a very incomplete simulation of its user. These avatars can also be directed by their users, but then their movements no longer correspond exactly to what the user does. They are comparable to puppets guided by a puppeteer’s strings: the movements of the puppet do not correspond to those of the puppeteer’s hand. However, this difference does not necessarily confer autonomy.5

Autonomy in avatars appears with the category of “autonomous actors,” endowed with behaviors that allow them to act in a relatively autonomous way in order to accomplish their tasks. They see, hear, touch, and move by means of virtual sensors and actuators. Daniel Thalmann, a specialist in virtual humans, states that they can even play tennis, basing their game on that of their opponent. In other words, after interpreting information from their environment, they can make decisions and act accordingly.

At a more advanced level of autonomy, there are the actors that possess a representation of the world that surrounds them – objects, other synthesized actors, avatars representing humans – and are able to communicate with them. Virtual universes are far more credible when they are inhabited by autonomous avatars. This communication brings into play a capacity to interpret and use a nonverbal language based on body postures expressing an emotional state. Nonverbal communication is essential in order to direct interactions between synthetic beings; it is an expression of the body’s thought.

Robots

Artificial humans classified as robots are material devices: they have bodies that can be seen and touched, and they act on real objects. The term “robot” was coined by a playwright in the early 1920s and designated humans that were artificial but organic in constitution.6 Subsequently, the term was widely used to designate mechanical humans or non-humanoid devices capable of replacing humans in certain tasks. Robots extend a mythic quest that reaches far into the past. In modern culture they appear as man’s double under two opposing aspects: a threatening one (for example, robots stealing work from humans) or a benign one (a friend to children and the handicapped). The robot distills all our fears and hopes when we are faced with poorly socialized or misunderstood advances in technology.

At the level of zero autonomy, we find robots whose functions are strictly repetitive: for example, painting robots in the automotive industry, or cleaning robots. At a higher level, we find machines, usually humanoid or animal in shape, endowed with a degree of autonomy. Autonomous robots differ from one another essentially in two parameters: their level of autonomy and their field of action. There are no universal robots whose autonomy allows them to solve any task, and we are not about to have such robots (note that we ourselves are not skilled at solving any and all tasks). Nevertheless, certain robotics researchers believe that a form of consciousness could be simulated in robots, facilitating the emergence of a very high level of intentionality and decisional autonomy. The programming of such devices is often inspired by algorithms that have emerged from artificial intelligence and the theory of evolution, the same kinds of algorithms used in the creation of virtual humans.

Endowing robots with a level of autonomy that approaches that of humans in their actions and their relationships with other intelligent entities would require them to possess similar cognitive abilities (reasoning, evaluation, decision making, ability to adapt to situations, ability to formulate a strategy that takes into account past, present and future experiences, etc.). These robots would also have to be capable of expressing emotions (though it would not be necessary for them to actually feel those emotions), and even of expressing empathy (currently the subject of specialized research), if they are to live and act among other intelligent entities.

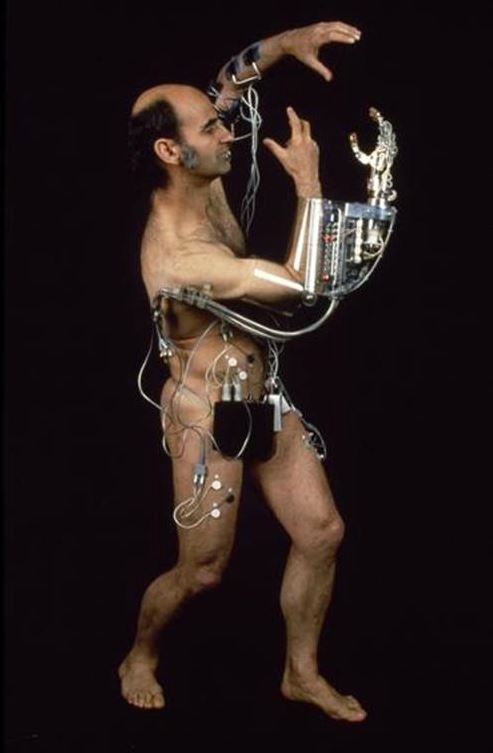

There is an intermediate category between robots and humans: cyborgs. A cyborg is “a human being,” according to Anaïs Bernard and Bernard Andrieu, “who has received mechanical or biological grafts, blending digital, logistical and robotic technology. Exoskeletons and prostheses make the individual’s body a locus of hybridization and transformation, with the aim of recreating new perceptions of his environment through a modification of the notions of space and time.” (Bernard, 2015) These grafts, usually utilitarian in nature, can become the objects of aesthetic experiences, like Stelarc’s The Third Hand (1980), which gave internet users the chance to use electrical discharges to stimulate an electronic hand grafted onto the artist’s forearm.

According to the International Federation of Robotics, approximately four million personal-use robots were sold worldwide in 2013, and 31 million more were forecast to be sold by 2017. Although it is impossible to predict the specific uses for which they will be designed, we can expect that they will be principally intended to entirely or partially replace humans in dangerous or difficult situations, or in hostile locations (military and nuclear applications are the subject of considerable attention). They will assist the handicapped (robotic devices may even be piloted by thought, as certain prototypes already are); they will rescue, diagnose and treat accident victims; and they will be intelligent, empathetic and sympathetic companions to the elderly and to children. The applications are innumerable, and some already function with a degree of success. It is as though humans, consciously or unconsciously, by externalizing the cognitive abilities that are unique to them (as they did with the tool), as well as their emotional and empathetic faculties, were in the process of bringing into the world a new, very closely related human species, a sort of human co-species.7 Although at present none of these artificial beings is indistinguishable from a human, the tendency is to move closer and closer to this model. Some researchers, such as the philosopher Gérard Chazal (Chazal, 1995, p. 68) or the evolutionary biologist Richard Dawkins, even foresee that in a not improbable future computers will acquire self-awareness. The technology that underlies these devices no longer merely constitutes an environment for humans; it is creating the conditions for contact with quasi-living artificial beings.
From an evolutionary standpoint, we have introduced an unexpected change in the direction of natural evolution, substituting the deliberate choices of science, with all their utopian possibilities and risks, for the laws of natural selection, which are driven partly by chance. Evolution never stops moving forward.

The Natural Human / Artificial Human Aesthetic Relationship

It is from among this growing race of artificial humans, which resemble us more and more, that artists in the performing arts and the theater recruit partners and accomplices rather than zealous servants. Historically, the use of artificial humans in these arts belongs to the movement to de-specify art, along with the “de-definition”8 of art (described by Harold Rosenberg) that began with the abandonment of the traditional techniques of the fine arts. Henceforth, all techniques can be used for artistic ends. However, while de-specification spread the field of creation across unlimited possibilities (at the risk of its own disappearance), the introduction of the digital technologies inherent in artificial humans has tended to reunite the field into a fairly coherent and structured whole, despite the variety of elements that make it up.

The aesthetic approach to relationships between natural humans and artificial humans profoundly changes the nature of these relationships. They are no longer evaluated in terms of the power and speed of calculations, of efficiency in communicating, or of tasks to be accomplished, but in terms of pleasure – a pleasure unique to the aesthetic experience, a pleasure accompanied by a large palette of emotions, moods and thoughts. Consequently, debates such as that surrounding the “uncanny valley” (the theory that the more a robot resembles a human, but without succeeding completely, the less empathy we feel for it) no longer have a foundation, since the imperfections of such a robot may be voluntarily created by its maker not in order to provoke discomfort in the audience, but to incite aesthetic pleasure. Art’s paradox is to transform ugliness into beauty.

Avatars and robots have their own aesthetic. When we observe these devices in action, our attention focuses on the forms (physical forms, but also attitudes, gestures, and possibly the expression of emotions) that reveal, or seem to reveal, their autonomy. We watch for the slightest hint that confirms or disproves that they share something with us. We undergo a singular aesthetic experience that makes us feel empathy for them – an empathy that increases in proportion to the degree to which we recognize this “something.” Autonomy and empathy are related: indications of autonomy in an artificial human stimulate the empathy of human observers and lead them to project themselves mentally into the place of the device.

Empathy and Co-presence

Empathy is a mental and physical state that simulates the subjectivity of another; it is the capacity to put oneself in another’s place while keeping one’s own identity,9 according to neuroscientist Jean Decety. Empathy requires two fundamental components. The first is an emotional and motor resonance that is triggered automatically, uncontrollably and unintentionally: we understand another’s gestures and emotions only to the extent that we mentally simulate them in a sort of “shared body state,” a neural mechanism shared by observer and observed that Gallese calls “unmediated resonance.” The second component is a controlled and intentional adoption of another’s subjective perspective. I would add a third component to empathy: temporal resonance. Empathy also makes us share the moment of another; it plunges us into the same temporal flow and makes us copresent with the other. Conversely, we experience a being as other if we are unable to enter into motor, emotional, subjective, and temporal resonance with it.

According to psychiatrist Daniel Stern,

The mind is always incarnated in a person’s sensorimotor activity. It is interwoven with the immediate physical environment that co-creates it. It is constructed through its interactions with other minds. It draws and maintains its shape and its nature from this open exchange. It emerges and exists only because of its continual interaction with intrinsic cerebral processes, with the environment, and thus other minds. (Stern, 2003, p. 119)

For each one of us, a community of minds brought together in an “intersubjective matrix” is the source of our mental life and gives our minds their current shape. (Stern, 2003, p. 100)

Paradoxically, we can also feel empathy for an object, such as a piece of architecture or a painting, under certain conditions. The philosopher Adam Smith was the first to discuss empathy with regard to the theater (though he did not use the word empathy, which did not exist in his era, but the closely related word sympathy).10 For him, in order for the spectators of a play to understand the actions of the characters, they must identify with the actor, project themselves onto him, and adopt his point of view, while on the other side the actor must identify with the spectators in order to feel their reactions and emotions. Smith thus perceived the double communication (we would say an intersubjective communication) that determines a good reception of the meaning of a play. The idea of empathy would largely inspire aesthetics at the end of the nineteenth century. Theodor Lipps developed it under the term Einfühlung. “All aesthetic pleasure,” he affirmed in his Ästhetik (Lipps, 1903), “is uniquely and simply founded on Einfühlung.” “Aesthetic pleasure is the objectified pleasure of the self.” To enjoy something aesthetically is to make one’s perception an object of pleasure. The idea of empathy then fell into relative neglect, but it resurfaced a few years ago thanks to the contribution of the cognitive sciences and, in particular, the discovery of mirror neurons. For the neuroscientist Vittorio Gallese and the art historian David Freedberg,11 as well as for the neurobiologist Jean-Pierre Changeux (Changeux, 1994, p. 46), empathy is at the foundation of the aesthetic reception of works of art. Without claiming that it is the sole foundation of this reception (aesthetic pleasure is also generated through other neurological pathways), I nevertheless believe that it constitutes the natural system (a phylogenetic inheritance) that determines the emergence of aesthetic pleasure in a subject.12

Immersion, Motor Resonance, and Temporal Resonance

The question is this: what changes will the presence of artificial humans endowed with autonomy provoke in aesthetic reception? Admittedly, most artificial humans created for artistic ends are not truly autonomous but (and herein lies the skill of their creators) give the illusion of being so. A tiny detail in their behavior, a seeming hesitation (in reality a controlled one), is all it takes for the audience to believe, or want to believe, that the artificial human possesses some spark of life or intelligence. In a twenty-minute performance,13 the Japanese playwright Oriza Hirata placed on stage, alongside two humans, two vaguely anthropomorphic cleaning robots that complained about their jobs. The programming, made extremely difficult by the precision required in the vocal and gestural dialogue, was entirely automatic. The slightest gesture, the slightest silence, the slightest vocal nuance was pre-planned. The robots possessed no autonomy whatsoever. In this respect, they were no different from L’Écrivain, the marvelous little eighteenth-century automaton of Pierre and Henri-Louis Jaquet-Droz. Empathy is elicited not by the realism of the forms, but by the realism of the gestures and behavior.

This type of realism creates the illusion of autonomy, of a non-mechanical life full of surprises and the unexpected, and it incites empathy, as the audience’s reactions demonstrated: the spectators laughed, cried, and had the feeling that the robots were speaking and moving in a subjective way. Hirata observes: “What was very impressive was that the real robots were completely different from the robots that we see on screen, in films. … Through the robots I rediscovered the power of theater: namely that someone is doing something right in front of me.”14 Through the empathy they feel toward the robots, through this immersion in the same space as the machines and this resonance with their temporality, the spectators have the impression of co-presence within an unmediated intersubjective communication.

Moreover, if empathy is an emotional resonance that spontaneously arises, it is also a way of accessing the subjectivity of another. It is itself modulated by the subjectivity of the individual who experiences it, his history, his memory, and his culture. For example, I doubt that cultures that forbid pictorial representations are inclined to develop empathy for this type of representation. Japanese culture, in contrast, which attributes a principle of life to all objects, whether animate or inanimate, would tend to favor the adoption of these devices just as it has favored the culture of puppetry.

The “Realism” of Gestures

Many works play on the “realism” of gestures and behavior. This realism can be achieved at little cost through automatic techniques: introducing pseudo-random variation into the program, or simply relying on the imperfect play (in the sense of indecision or imprecision) of the mechanisms. I recall, for example, attending a performance featuring robots on stage in 1992 in Nagoya, Japan, created by the team of Rick Sayre and Chico MacMurtrie. It took place during an international exhibition15 devoted to robots, in which Michel Bret, Marie-Hélène Tramus, and I had been invited to participate. The performance was entitled A Participatory Percussive Robotic Experience Leading to the Birth of the Triple Dripping Fetus. Enormous metallic robots performed a wild percussive concert in which the deafening pneumatic and electric noises of the actuating mechanisms mixed with the thunder of the percussion. The robots, controlled by computer, possessed no autonomy. However, the jolts of the electric mechanisms and a certain uncertainty in the sequence of movements gave spectators the illusion that they were in the presence of quasi-autonomous creatures. I felt this impression quite strongly myself when an unforeseen event occurred during the performance: one of the robots lost his arm, which landed at my feet. I felt pity for the one-armed robot, which carried on without his arm. His handicap made him human in my eyes. More recently, MacMurtrie has introduced elements of artificial intelligence into the programming of his robots to make their actions smoother and more autonomous, though still within the limits imposed by the scenario.
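How the Nagoya robots were actually programmed is not documented here. Purely as an illustration of the general technique of pseudo-random variation, the following minimal Python sketch takes a fixed, pre-planned sequence of actions and shifts each start time by a small random amount, so that a fully scripted machine appears to hesitate. The script, the action names and the `max_jitter` parameter are hypothetical.

```python
import random

# Hypothetical pre-planned choreography: (action name, start time in seconds).
SCRIPT = [("raise_arm", 0.0), ("strike_drum", 1.2), ("lower_arm", 2.0)]


def humanize(script, max_jitter=0.15, seed=None):
    """Return a copy of the script with small pseudo-random timing offsets.

    The order of actions is preserved: each start time is shifted by at most
    max_jitter seconds and is never moved before the previous action.
    """
    rng = random.Random(seed)  # seeded generator: the "chance" is reproducible
    result = []
    previous = 0.0
    for action, start in script:
        shifted = start + rng.uniform(-max_jitter, max_jitter)
        shifted = max(shifted, previous)  # keep the scripted order intact
        result.append((action, round(shifted, 3)))
        previous = shifted
    return result


if __name__ == "__main__":
    print(humanize(SCRIPT, seed=1))
```

The behavior stays entirely determined by the program (the same seed always yields the same "hesitations"), which matches the point made above: the impression of autonomy is produced without any actual autonomy.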

In truth, the autonomy of a synthetic actor, even a highly autonomous one, or of a human actor is necessarily limited; otherwise the meaning of the play collapses. Faced with this problem, Franck Bauchard believes that there are two possible responses. Either the actor adapts to the constraints of the staging and the machinery, that is, to a system, or he interacts with the machine by exchanging random information with it in a continuous loop. “At that moment,” Bauchard says, “we have something that can no longer be fixed, but that is not totally unstable. … It is live, it is immediacy. More and more, the question today is how the machine creates unpredictability on stage. I think that a robot introduces the unpredictable.”16

Creating Unpredictability

Jean Lambert-Wild and Jean-Luc Therminarias, the directors of the 2001 production of Orgia, a play written in 1968 by Pier Paolo Pasolini (a “theater of words,” according to the author), chose the second response: making the actor (in this case a human) interact with the machinery (virtual beings whose images were projected on a screen). The actor was equipped with sensors that detected physiological signals (heartbeat, skin conductivity and temperature, respiratory volume). This information, interpreted by a computer as emotional indicators, was processed in real time by a program (Dedalus) that allowed the actor to interact with these virtual beings, a sort of imaginary marine organisms created from artificial-life models and endowed with unpredictable behavior. Over the course of the play, the emotions experienced by the actor acted, via the computer, on the behavior of the organisms, and the actor reacted in turn to their behavior. The actor’s presence was redoubled by that of the avatars, whose own presence depended on the actor’s emotions, which in turn depended on the emotions of the audience. It was a particularly troubling, immersive situation in which several temporalities coexisted and intersected.

Another way of introducing unpredictability into the play of synthetic actors is to accentuate the effect of presence by involving the spectators themselves in the dramatic action. This is what two Canadian artists, Bill Vorn and Louis-Philippe Demers, recently proposed in France17 with their show Inferno, an inaugural event in which robotic arms were attached to the bodies of a few audience volunteers, transforming them into cyborgs. Over the course of the performance, which included several robots on stage, these spectators, while remaining free to act as they pleased, were subjected to the pressures of the robotic arms, which were programmed and strictly controlled. They thus felt mechanical gestures on their bodies, full of intent, communicated directly to their sensorimotor systems. This provoked a real feeling of oppression and servitude, the goal of which, according to the authors, was to make the spectators realize that the cybernetic process can conceal a living hell. Once again we find ourselves in the paradoxical situation where the spectator feels (or can feel) aesthetic pleasure through an anxiety-inducing relationship.

The artistic form that allows a strong autonomy, capable of adapting to a situation not foreseen by the programming, seems to be controlled improvisation. It was with this in mind that Michel Bret and Marie-Hélène Tramus, in collaboration with the physiologist Alain Berthoz,18 created an autonomous virtual being with the appearance of a dancer. This dancer was endowed with a network of artificial neurons, a learning function, and a body that followed the laws of human biomechanics. During the initial phase of creation, a real dancer wearing an exoskeleton that captured the movements of her body’s joints taught the virtual dancer various dance steps, correcting her when she made an error. Once trained, the virtual dancer was linked to a real dancer equipped with motion capture technology. When the real dancer began to dance in front of the virtual dancer, the virtual being responded by improvising dance steps. These steps were not exactly those she had learned, but a compromise between those she had learned and those executed by the real dancer. Each improvisation, depending on what the real dancer offered, gave rise to an unmediated nonverbal communication that took the form of an original “pas de deux.”
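The virtual dancer relied on a network of artificial neurons, which is not reproduced here. As a much simpler, hypothetical stand-in for the idea of a "compromise" between learned and observed steps, the Python sketch below represents a pose as a list of joint angles, picks the learned pose nearest to the one the real dancer is performing, and blends the two with a weight `alpha`. The `Pose` type, the distance measure and `alpha` are assumptions made for illustration only; they are not the method used by Bret, Tramus and Berthoz.

```python
from typing import List

Pose = List[float]  # hypothetical: one joint angle (in degrees) per articulation


def nearest_learned(observed: Pose, repertoire: List[Pose]) -> Pose:
    """Pick the learned pose closest (squared Euclidean distance) to the observed one."""
    return min(repertoire, key=lambda p: sum((a - b) ** 2 for a, b in zip(p, observed)))


def improvise(observed: Pose, repertoire: List[Pose], alpha: float = 0.5) -> Pose:
    """Blend the closest learned pose with the partner's observed pose.

    alpha = 1.0 repeats the learned step exactly; alpha = 0.0 simply copies the partner.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    learned = nearest_learned(observed, repertoire)
    return [alpha * l + (1 - alpha) * o for l, o in zip(learned, observed)]


if __name__ == "__main__":
    repertoire = [[0.0, 90.0], [45.0, 45.0]]  # two hypothetical learned poses
    print(improvise([40.0, 50.0], repertoire))  # → [42.5, 47.5]
```

Intermediate values of `alpha` give a step that is neither a repetition of the repertoire nor a mere imitation of the partner, which is the sense of "compromise" described above.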

The aesthetic reception of performances in which artificial humans are “engaged” shares fundamental traits with aesthetic reception in the traditional arts: it requires empathy, the cognitive equipment inherited through our phylogenesis. This mental and physical state occurs even during the mistakenly named “passive” contemplation of a work of art.19 In the aesthetic experience, the body and cognition are never inactive. In the traditional stage arts, this empathy is much more intense because it is exerted directly on actors who share the spectator’s space-time. One has the impression of a more intimate co-presence, in which nonverbal communication operates most effectively. We naturally feel that we belong to the same intersubjective matrix as the actors. When artificial humans intervene in aesthetic reception, we tend to seek in them experiences that resonate in us, which leads us to attribute our own feelings and responses to these beings in a mirror effect. Hence the performing arts are a privileged and experimental space where, in the gratifying mode of aesthetic pleasure, natural humans mingle with these beings that look so much like them and welcome them into their community.

Notes

[1] This idea was foreshadowed in the middle of the seventeenth century by Thomas Hobbes, who considered thought (in the sense of reason) as a calculation composed of words maintaining logical relationships of inclusion and exclusion among themselves.

[2] We do, however, owe some incontestable successes to the AI of this era in the fields of perception (artificial vision, recognition of acoustic signals and speech), reasoning (expert systems, decision-making aids, diagnostic aids, control aids, intelligent databases, digital games, etc.), language (automatic translation, text analysis and processing, indexing, classification, etc.), and action (planning, robotics, etc.).

[3] It is constructed from terms borrowed from ancient Greek: auto (the self) and nomos (the law).

[4] Other models, like genetic algorithms inspired by Darwinism (variation, adaptation through selection), or algorithms modeled on market mechanisms, have provided and continue to provide a reservoir of extremely useful applications for the creation of virtual humans, their morphologies, their behaviors in a particular situation, or their interaction with other beings and things.

[5] This technique is used in distributed virtual reality environments. See Daniel Thalmann of the EPFL-DI-Laboratory of Infography, who proposes dividing virtual humans into four types (online).

[6] It was initially used by the Czech writer Karel Čapek in his play R. U. R. (Rossum’s Universal Robots), first performed in 1921.

[7] See Couchot, Edmond. “Tout un peuple dans un monde miroir” in Bourassa, Renée and Louise Poissant (eds.), Personnages virtuels et corps performatif – Effets de présence. Montréal: Presses de l’Université du Québec, 2013.

[8] See Rosenberg, Harold. The De-definition of Art, 1972. Translated into French as La Dé-définition de l’art, trans. Christian Bounay. Nîmes: Jacqueline Chambon, 1992.

[9] See Decety, Jean. “L’empathie est-elle une simulation mentale de la subjectivité d’autrui ?” in Berthoz, Alain and Gérard Jorland (eds.), L’Empathie. Paris: Odile Jacob, 2004.

[10] Smith, Adam. The Theory of Moral Sentiments, 1759. In fact, Adam Smith is reworking an idea of David Hume, who, in A Treatise of Human Nature (written 1739-40), had already seen in sympathy a means of intersubjective communication that allows us to put ourselves in the place of others and share in their suffering or their joy.

[11] See Freedberg, David and Vittorio Gallese. “Motion, Emotion and Empathy in Aesthetic Experience,” Trends in Cognitive Sciences, vol. 11, 2007, pp. 197-203.

[12] On the question of empathy, see Couchot, Edmond. La Nature de l’art – Ce que les sciences cognitives nous révèlent sur le plaisir esthétique, Paris: Hermann, 2012, ch. V, “L’empathie dans la communication intersubjective,” pp. 149-175.

[13] A length imposed by the battery life.

[14] See Boudier, Marion. “Un mois avec Oriza Hirata,” Agôn, online.

[15] An exhibition entitled “The Robots-Man and Machine at the End of 20th Century,” 1992, Nagoya, Japan.

[16] Boudier, Marion. op. cit.

[17] The performance took place at the Maison des Arts de Créteil, on the 14th and 15th of April 2015.

[18] On this subject, see Bret, Michel, Marie-Hélène Tramus and Alain Berthoz. “Interacting with an Intelligent Dancing Figure: Artistic Experiments at the Crossroads between Art and Cognitive Science,” Leonardo, vol. 38, no. 1, February 2005, pp. 46-53.

[19] See Couchot, Edmond. “La boucle action-perception-action dans la réception esthétique interactive,” Proteus, no. 6, “Le spectateur face à l’art interactif,” 2013.

Bibliography

– Bernard, Anaïs and Bernard Andrieu, “Corps / Cyborg,” in Marc Veyrat and Ghislaine Azémard (eds.), 100 Notions pour l’art numérique, Paris, Les Éditions de l’immatériel, 2015, 266 pp.

– Boudier, Marion, “Un mois avec Oriza Hirata,” Agôn, online, <https://journals.openedition.org/agon/index.php?id=1141>.

– Bret, Michel, Marie-Hélène Tramus and Alain Berthoz, “Interacting with an Intelligent Dancing Figure: Artistic Experiments at the Crossroads between Art and Cognitive Science,” Leonardo, vol. 38, no. 1, February 2005, pp. 46-53.

– Changeux, Jean-Pierre, Raison et plaisir, Paris, Odile Jacob, 1994, 226 pp.

– Chazal, Gérard, Le Miroir automate, Ceyzérieu, Champ Vallon, 1995, 253 pp.

– Couchot, Edmond, “La boucle action-perception-action dans la réception esthétique interactive,” Proteus, no. 6, 2013, pp. 27-34.

– Couchot, Edmond, La Nature de l’art – Ce que les sciences cognitives nous révèlent sur le plaisir esthétique, Paris, Hermann, 2012, 310 pp.

– Couchot, Edmond, “Tout un peuple dans un monde miroir,” in Renée Bourassa and Louise Poissant (eds.), Personnages virtuels et corps performatif – Effets de présence, Montréal, Presses de l’Université du Québec, 2013, pp. 47-66.

– Decety, Jean, “L’empathie est-elle une simulation mentale de la subjectivité d’autrui?,” in Alain Berthoz and Gérard Jorland (eds.), L’Empathie, Paris, Odile Jacob, 2004, pp. 53-88.

– Freedberg, David and Vittorio Gallese, “Motion, Emotion and Empathy in Aesthetic Experience,” Trends in Cognitive Sciences, vol. 11, 2007, pp. 197-203.

– Gallese, Vittorio, “Intentional Attunement. The Mirror Neuron System and its Role in Interpersonal Relations,” Interdisciplines, online, <https://www.interdisciplines.org>.

– The Robots-Man and Machine at the End of 20th Century, exhibition, Nagoya, Japan, 1992.

– Rosenberg, Harold, The De-definition of Art, 1972. French translation: La Dé-définition de l’art, trans. Christian Bounay, Nîmes, Jacqueline Chambon, 1992.

– Simondon, Gilbert, Du mode d’existence des objets techniques, Paris, Aubier, 1969.

– Stern, Daniel, Le moment présent en psychothérapie. Un monde dans un grain de sable, Paris, Odile Jacob, 2003, 304 pp.

– Thalmann, Daniel, “Des avatars aux humains virtuels autonomes et perceptifs.”